Below are some notes I made while debugging mod_proxy_balancer. I had to set it up in a hurry when I realized that the Amazon Elastic Load Balancer I was using supports sticky sessions only through cookies. I needed a load balancer that could use a URL parameter to maintain sticky sessions. Thankfully, a friend suggested that we use mod_proxy_balancer.

There is a lot of material about mod_proxy_balancer, but getting it working is hardly simple. There are some lesser-known details without which it will not work. I would suggest you take a look at Nginx or other alternatives before choosing mod_proxy_balancer.

Tech stack summary

I had to serve a NodeJS-based JavaScript API through a load balancer capable of sticky sessions. The reason for sticky sessions is beyond the scope of this blog :). The entire setup runs on Amazon EC2 instances with CentOS.

The whole #!

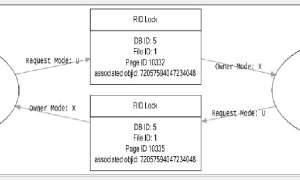

Since I had only two servers to load balance, I assigned them the ids s.1 and s.2. It is very important that each id consists of an alphanumeric prefix, a dot, and then a number, e.g. server.1, t.2, etc. The mod_proxy_balancer code splits this value at the dot and uses the second part as the route. So s.1 would point to “route=1”.

    <Proxy balancer://mycluster/>
        BalancerMember http://<ip-address-1>:80 route=1
        BalancerMember http://<ip-address-2>:80 route=2
    </Proxy>
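The mapping from an id such as s.1 to route=1 can be sketched in a few lines of JavaScript. This is only an illustration of the behaviour described above, not the Apache source; the function name `routeFromStickyValue` is hypothetical, and it assumes the route is whatever follows the first dot.

```javascript
// Illustration only: mimics the behaviour described in this post,
// it is not taken from the mod_proxy_balancer source.
// Assumption: the route is everything after the first dot.
function routeFromStickyValue(value) {
  if (typeof value !== "string") return null;
  const dot = value.indexOf(".");
  // No dot, or nothing after the dot: no route can be derived.
  if (dot === -1 || dot === value.length - 1) return null;
  return value.slice(dot + 1);
}

console.log(routeFromStickyValue("s.1"));      // prints 1
console.log(routeFromStickyValue("server.2")); // prints 2
console.log(routeFromStickyValue("s1"));       // prints null
```

An id without a dot yields no route here, which is one way to see why an id like s1 would never stick to a server.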

The first request reaching mod_proxy_balancer carries no route, so it is sent to one of the BalancerMember entries chosen by the load balancing method. Let's say this request is received by the server with route id s.1. The server then serves the request along with its route id (routeId=s.1). All further requests from that browser should now contain the URL parameter “routeId=s.1”. The stickysession setting in the configuration below tells mod_proxy_balancer to read this URL parameter and use it to route the request to server 1. The name given to stickysession must match the name of the parameter the application actually sends.

    ProxyPass / balancer://mycluster/ lbmethod=byrequests stickysession=_nsrouteid
    ProxyPassReverse / balancer://mycluster/
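On the NodeJS side, each server has to put its own route id into the URLs it hands back to the browser. The following is a minimal sketch of that step, not the code from my setup: `PARAM_NAME`, `ROUTE_ID`, and `withRoute` are hypothetical names, and `PARAM_NAME` is assumed to be whatever name is configured as stickysession.

```javascript
// Hypothetical helper, for illustration only.
// PARAM_NAME must equal the stickysession value in ProxyPass (assumed here).
const PARAM_NAME = "routeId";
// Route id of this particular backend (assumed; the other server would use "s.2").
const ROUTE_ID = "s.1";

// Returns the path and query of rawUrl with the sticky parameter set.
// The base is only needed so that relative URLs can be parsed.
function withRoute(rawUrl, base = "http://localhost") {
  const url = new URL(rawUrl, base);
  // set() replaces an existing value, so a stale route id is overwritten.
  url.searchParams.set(PARAM_NAME, ROUTE_ID);
  return url.pathname + url.search;
}

console.log(withRoute("/api/items?page=2")); // prints /api/items?page=2&routeId=s.1
```

Every link or API endpoint the client will call next would go through such a helper, so the balancer always sees the parameter.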

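That should get things working. But how do we know that the above setup is working and is sending requests to the appropriate servers? The log configuration below adds the balancer's environment variables to the combined log format.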

LogLevel warn

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" \"%{BALANCER_SESSION_STICKY}e\" \"%{BALANCER_SESSION_ROUTE}e\" \"%{BALANCER_WORKER_ROUTE}e\"" combined

LogFormat "%h %l %u %t \"%r\" %>s %b" common

LogFormat "%{Referer}i -> %U" referer

LogFormat "%{User-agent}i" agent

BALANCER_SESSION_STICKY – This is assigned the stickysession value used for the current request. It is the name of the cookie or request parameter used for sticky sessions.

BALANCER_SESSION_ROUTE – This is assigned the route parsed from the current request.

BALANCER_WORKER_ROUTE – This is assigned the route of the worker that will be used for the current request.

I have taken the above descriptions from the mod_proxy_balancer documentation.

To begin with, BALANCER_SESSION_STICKY should be the same as the “stickysession” parameter in the ProxyPass configuration. BALANCER_SESSION_ROUTE will not be set for the first request from a browser, so it appears as “-” in the log. BALANCER_WORKER_ROUTE will be chosen by the load balancing algorithm.

After the first request is served by one of the servers, all further requests from that browser should carry the routeId URL parameter. BALANCER_SESSION_ROUTE should show the route taken from the URL parameter: it should be “1” when the URL parameter is “routeId=s.1”. BALANCER_WORKER_ROUTE will be the same as BALANCER_SESSION_ROUTE, which shows that the requests are sticky.
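Reading these three fields by eye gets tedious, so here is a rough sketch of a checker for lines written with the combined format above. It assumes the three balancer fields are the last three quoted values on the line and that an unset variable is logged as "-". The function name `stickyFields` is hypothetical and both sample lines are made up for illustration; they are not real log output.

```javascript
// Sketch only: pulls the last three quoted fields out of an access log line
// written with the "combined" LogFormat shown earlier.
function stickyFields(line) {
  const m = line.match(/"([^"]*)" "([^"]*)" "([^"]*)"\s*$/);
  if (!m) return null;
  const [, sticky, sessionRoute, workerRoute] = m;
  return {
    sticky,       // BALANCER_SESSION_STICKY
    sessionRoute, // BALANCER_SESSION_ROUTE
    workerRoute,  // BALANCER_WORKER_ROUTE
    // No route in the request yet: the balancer picked the worker itself.
    firstRequest: sessionRoute === "-",
    // Sticky when the requested route and the chosen worker agree.
    isSticky: sessionRoute !== "-" && sessionRoute === workerRoute,
  };
}

// Made-up sample lines, not real log output.
const first = '10.0.0.5 - - [10/Oct/2013:13:55:36 +0000] "GET /api HTTP/1.1" 200 512 "-" "curl/7.29" "routeId" "-" "1"';
const later = '10.0.0.5 - - [10/Oct/2013:13:55:40 +0000] "GET /api?routeId=s.1 HTTP/1.1" 200 512 "-" "curl/7.29" "routeId" "1" "1"';

console.log(stickyFields(first).isSticky); // prints false
console.log(stickyFields(later).isSticky); // prints true
```

A request that carries a route but lands on a different worker would show up with both `firstRequest` and `isSticky` set to false, which is the case worth investigating.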